Where should you place your dead-letter queues?

author: Vincent Chan

Introduction: Folklore (gossip?)

There is a behavior with AWS Lambda functions that I've experienced and have heard other developers mention but can't recall ever reading about in the AWS documentation.

That behavior is that the number of concurrent executions drops when AWS Lambda produces an error.

That is, suppose you have a large number of messages for your lambda to process, so much so that the number of concurrent lambda executions is at, say, 1,000. From experience, I find that the number of concurrent lambda executions will only remain high so long as all lambda executions finish without error. When an error does occur, I notice a large drop in the number of concurrent lambda executions, say, down to 100.

The occasional failure would essentially cause the lambda function to become a bottleneck.

When communicating this observation to other developers, I occasionally hear confirmations that I am not alone in observing this. I imagine, therefore, that this observation is correct and that AWS probably has it documented somewhere. (Though where? I dunno.)

This might seem like a piece of folklore (gossip?) unrelated to the post title, "Where should you place your dead-letter queues?", but the two are highly related.

A common pattern for Lambda functions

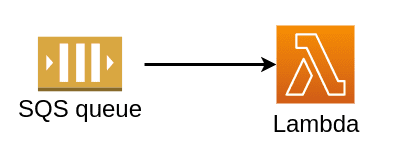

It is common to see an SQS queue in front of a Lambda function.

Without an SQS queue in front of the Lambda, you risk losing messages that your lambda was supposed to process but could not, for instance, because of a sudden burst in demand.

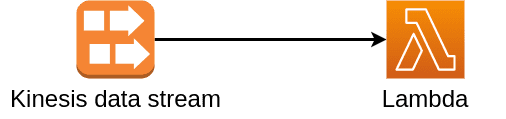

I should add that it does not need to be an SQS queue in front of the lambda; any message broker or queue would do. For example, if you're dealing with high volume or just want to be able to replay the sequence of events, you'd likely replace the SQS queue with a Kinesis data stream or Kafka.

Antipattern (No upside; All downsides)

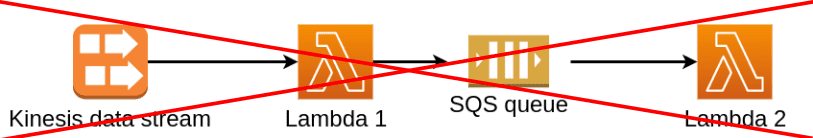

One thing you should not do is put an SQS queue in between a Kinesis data stream (Kinesis for short) and Lambda.

There is zero benefit in doing this. There are only downsides.

Not only would you require another lambda to poll from Kinesis and write to your SQS queue, but putting an SQS queue in between Kinesis and Lambda eliminates the advantages that Kinesis would have provided.

For example, Kinesis can accept messages up to 1MB; SQS queues, only 256kB. So, the only way for your SQS queue to function is if you don't allow any messages larger than 256kB to be put into Kinesis. What a waste!

Another nice feature of Kinesis is that it maintains ordering within each shard (a.k.a. partition) and allows you to "replay" the events. Standard SQS queues do not maintain ordering, so you lose ordering by putting an SQS queue in between Kinesis and Lambda.

Note: while this can be mitigated with the use of an SQS FIFO (first-in-first-out) queue, you would still need an extra lambda just to transfer messages from Kinesis to the SQS FIFO queue, and Kinesis would still have to reject any messages larger than 256kB.

Don't do this!

Three (four?) different potential places to put a DLQ

There are three (four?) different potential places to put a DLQ in the SQS queue+Lambda setup:

1. on the SQS queue,

2. on the lambda (by which I mean as a lambda configuration),

3. on the lambda, but as a failure destination (like option 2 above, this is also set up by configuring the lambda), and

4. inside the lambda (by which I mean to have your code send the message to the DLQ when an error occurs).

For options 1-3, the DLQ / failure destination is set up as a property / configuration of the AWS resource. For option 4, there is no configuration required. Instead, what is needed is the AWS SDK for whatever language you're using (and, optionally, some environment variables).

The differences in the behavior of the two groups are as follows.

For options 1-3, a problematic message is sent to the DLQ only after the lambda fails to successfully process it (potentially after multiple retries). As such, options 1-3 would all be accompanied by a drop in the number of concurrent lambda executions.

One caveat: as far as I can tell from the AWS documentation, the DLQ and failure destination configured on the function itself apply to asynchronous invocations rather than to messages that Lambda polls from an SQS queue. So, for messages delivered through the queue, the DLQ on the SQS queue is the one that takes effect.

For option 4, the problematic message is handled and sent to the DLQ inside the lambda. As long as no other problem occurs, the lambda would actually finish its execution "successfully." This means that no failure is recorded and, so, there would be no corresponding drop in the number of concurrent lambda executions.
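
To make the option 4 behavior concrete, here is a minimal sketch of per-record handling, assuming the lambda receives SQS batches. The names `handle_batch`, `process`, and `send_to_dlq` are hypothetical and only for illustration; in a real handler, `send_to_dlq` would probably wrap a call like `sqs_client.send_message(QueueUrl=..., MessageBody=...)`.

```python
import json
from typing import Callable


def handle_batch(
    event: dict,
    process: Callable[[dict], None],
    send_to_dlq: Callable[[str], None],
) -> dict:
    """Process each SQS record; divert failing records to a DLQ instead of raising.

    `process` and `send_to_dlq` are hypothetical callables supplied by the caller.
    """
    records = event.get("Records", [])
    diverted = 0
    for record in records:
        try:
            process(record)
        except Exception as exc:  # broad on purpose: no error should escape the handler
            # Keep the original body plus the reason so the message can be inspected later.
            send_to_dlq(json.dumps({"body": record.get("body"), "error": repr(exc)}))
            diverted += 1
    # Returning normally means Lambda sees this invocation as successful.
    return {"processed": len(records) - diverted, "diverted": diverted}
```

Note that if `send_to_dlq` itself raises, the invocation still fails, so this only hides errors that your own code knows how to catch.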

Below, I've added screenshots of what configuring a DLQ looks like (for options 1-3) as well as sample code showing how you might handle problematic messages inside your lambda (option 4).

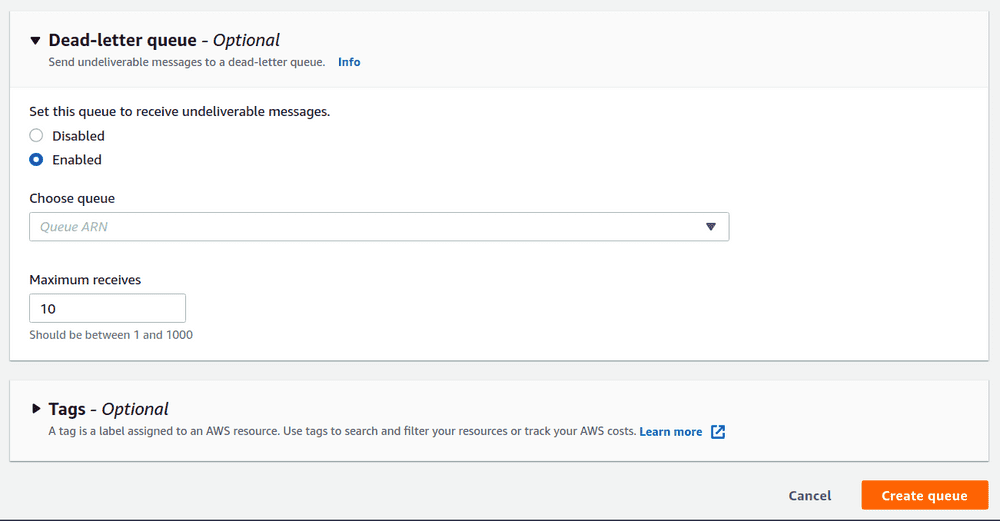

1. [Screenshot] Configuring a DLQ during SQS queue creation
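
If you'd rather script this than click through the console, the same setting can be sketched with boto3. This assumes the DLQ already exists and you know its ARN; the helper names `redrive_attributes` and `create_queue_with_dlq` are mine, and the default of 5 receives is an arbitrary choice for illustration.

```python
import json


def redrive_attributes(dlq_arn: str, max_receive_count: int = 5) -> dict:
    """Build the Attributes mapping that attaches a DLQ to a source queue."""
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    policy = {"deadLetterTargetArn": dlq_arn, "maxReceiveCount": max_receive_count}
    # SQS expects the redrive policy as a JSON string, not a nested dict.
    return {"RedrivePolicy": json.dumps(policy)}


def create_queue_with_dlq(
    sqs_client, queue_name: str, dlq_arn: str, max_receive_count: int = 5
) -> str:
    """Create the source queue with the DLQ attached and return its URL.

    `sqs_client` is expected to be a boto3 SQS client, e.g. boto3.client("sqs").
    """
    response = sqs_client.create_queue(
        QueueName=queue_name,
        Attributes=redrive_attributes(dlq_arn, max_receive_count),
    )
    return response["QueueUrl"]
```

Here `maxReceiveCount` is the number of times a message can be received before SQS moves it to the DLQ, which is the "max number of retries" knob.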

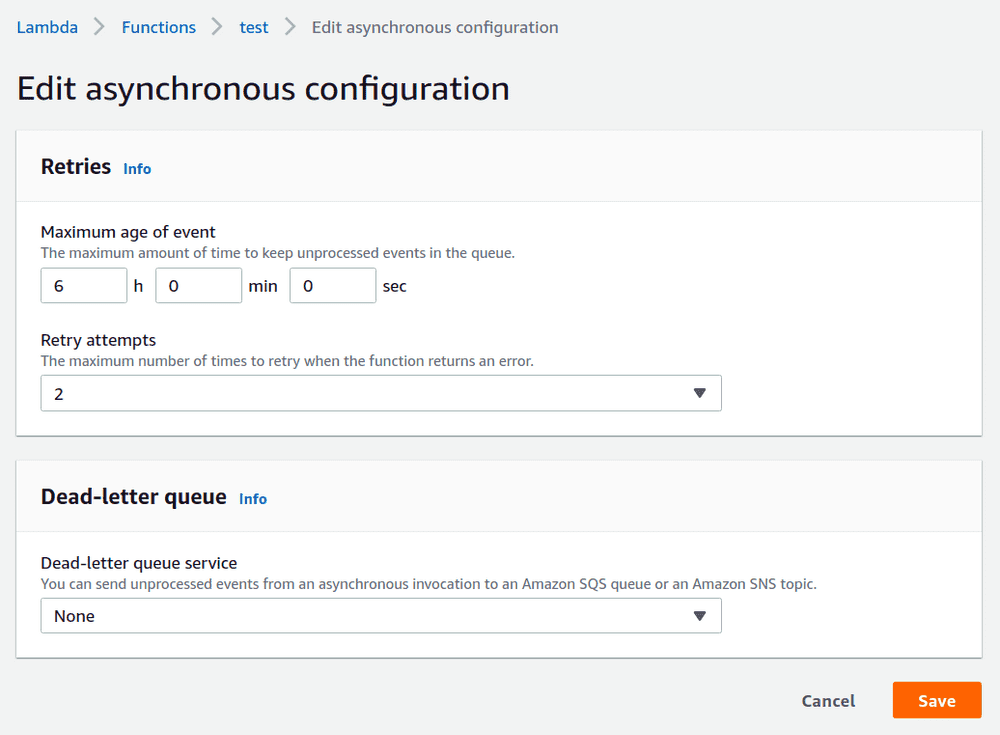

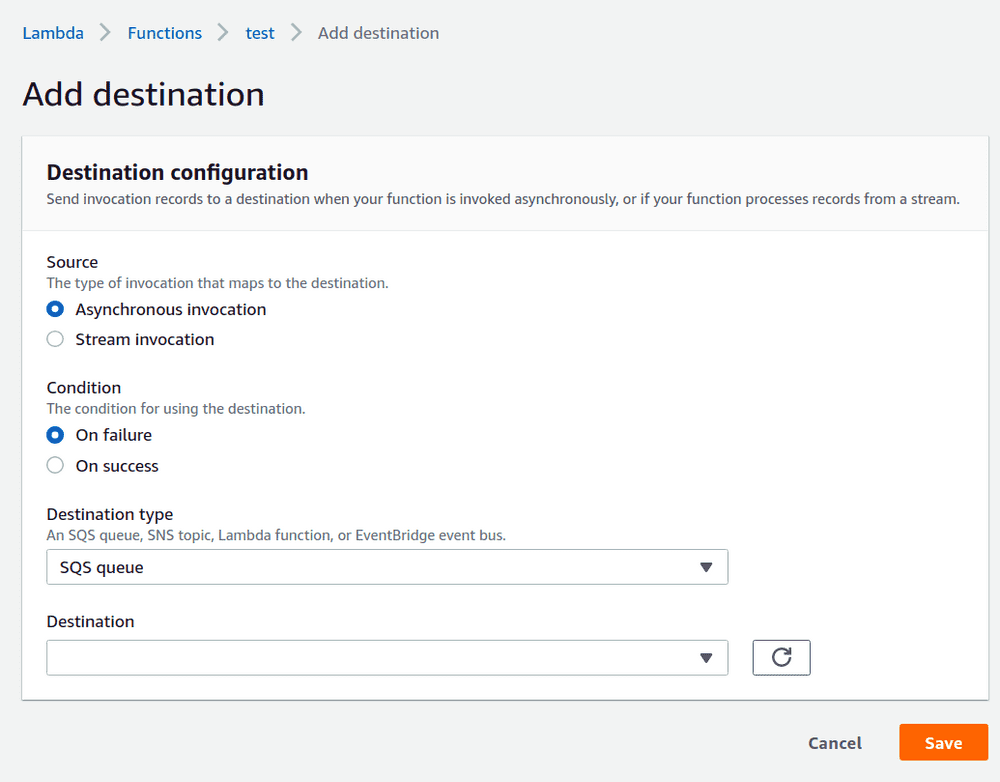

2. [Screenshot] Configuring a DLQ in Lambda

3. [Screenshot] Configuring a Failure Destination in Lambda
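
For reference, options 2 and 3 can also be set from code. The following is a rough sketch using two boto3 Lambda client calls, `update_function_configuration` and `put_function_event_invoke_config`. The wrapper names `set_function_dlq` and `set_failure_destination` are hypothetical, and `lambda_client` is assumed to be `boto3.client("lambda")`. As far as I know, both settings govern asynchronous invocations of the function.

```python
def set_function_dlq(lambda_client, function_name: str, dlq_arn: str) -> None:
    """Option 2: attach a dead-letter queue (SQS queue or SNS topic ARN) to the function."""
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        DeadLetterConfig={"TargetArn": dlq_arn},
    )


def set_failure_destination(
    lambda_client, function_name: str, destination_arn: str, max_retries: int = 2
) -> None:
    """Option 3: route failed asynchronous invocations to an on-failure destination."""
    if not 0 <= max_retries <= 2:
        raise ValueError("Lambda accepts 0 to 2 retry attempts")
    lambda_client.put_function_event_invoke_config(
        FunctionName=function_name,
        MaximumRetryAttempts=max_retries,
        DestinationConfig={"OnFailure": {"Destination": destination_arn}},
    )
```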

4. [Code snippet] Sending Messages to DLQ inside Lambda

The following is a snippet showing one way of sending problematic messages to a DLQ inside the lambda.

Here, the lambda expects its events to come from an SQS queue.

As such, it checks for the field `Records` inside `event`, which would exist if the event is from SQS.

If the event is not from SQS, then it sends the event to a DLQ and avoids processing the event any further.

```python
import os
import json

import boto3

# Assuming the environment variable `dlq_url` is set up with the proper SQS queue URL
DLQ = os.environ["dlq_url"]
sqs_client = boto3.client("sqs")


def lambda_handler(event: dict, context) -> dict:
    if "Records" not in event:
        # then the event is not from an SQS queue, don't process
        response = sqs_client.send_message(
            QueueUrl=DLQ,
            MessageBody=json.dumps(event, default=str)
        )
        return {"success": False}

    # else, continue processing as usual
    return {"success": True}
```

Conclusion

Although it might seem that I'm making a case for always putting the DLQ inside the lambda (i.e. handled by your code) instead of configuring a DLQ / failure destination as a property of your AWS resource, I actually think the right choice depends on your situation.

After all, it isn't as if options 1-3 that I listed above are without benefits. By letting the lambda fail, you gain the following:

- more accurate statistics on how your lambda function is doing (this can be especially important if you have CloudWatch alarms set up based on those statistics), and

- a drop in the number of concurrent lambda executions. (If the problem is with the code you deployed, then errors may be frequent, and you probably don't want to be spending a lot of money just to transfer messages from one queue to another. The drop in the number of concurrent lambda executions would decrease the number of those messages being uselessly transferred from one queue to another.)
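
To illustrate the statistics point, here is a sketch of the kind of CloudWatch alarm that depends on the lambda actually failing. It builds the arguments for boto3's `put_metric_alarm` against the `Errors` metric in the `AWS/Lambda` namespace. The helper name `error_alarm_kwargs`, the alarm name, and the threshold values are assumptions of mine, not recommendations. An alarm like this would stay quiet for errors that are caught and handled inside the lambda.

```python
def error_alarm_kwargs(
    function_name: str, threshold: int = 1, period_seconds: int = 60
) -> dict:
    """Build put_metric_alarm arguments for an alarm on a function's Errors metric."""
    return {
        "AlarmName": f"{function_name}-errors",  # hypothetical naming scheme
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No invocations in a period means no data points; treat that as healthy.
        "TreatMissingData": "notBreaching",
    }
```

You would pass the result along as `boto3.client("cloudwatch").put_metric_alarm(**error_alarm_kwargs("my-function"))`, where `my-function` is a placeholder name.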

My current feeling on the matter is that, for most cases, you should just set up the DLQ on the SQS queue in front of the lambda. That's probably the simplest option. Plus, you get to specify the max number of retries before the message is sent off to the DLQ. So, that's nice.

The main situation where I'd want to handle problematic messages inside the lambda is when the lambda is responsible for processing a lot of messages and we definitely don't want a bottleneck. For example, if updates are constantly being sent to the SQS queue in front of the lambda for processing, then I'd likely opt for handling problems solely from within the lambda. This way, other updates are not prevented from occurring.