Analytics pipelines

author: Vincent Chan

Okay, we all know that it is important to collect data. After all, it provides insight into what's working and what's not.

As a result, countless articles and blog posts have been written, not only to help people understand the importance of data and its collection, but also to cover the many different analyses that can be performed, along with their pros and cons.

Personally, though, I haven't had much luck finding articles that describe how to go about collecting said valuable data, beyond hearing someone say "use Google Analytics", of course.

I am, however, a cloud developer, and would rather have a better understanding of the process if not build it myself.

Besides, building it yourself comes with the added benefit that you get to make sure you're collecting the data you need, and that you're not collecting data that only serves to invade your users' privacy.

So, in this post, I will describe a simple analytics pipeline, as well as how to build it (in broad-ish strokes).

Collecting data for basic webpage analytics

Let's start with the basic, but important, question of "How many visitors does each of my webpages get a day?".

The analytics process here is pretty straightforward. When a user goes to one of your webpages, their browser downloads the HTML and related files (such as JavaScript and CSS) so that it can render the webpage for them. The idea is to include a JavaScript script that executes once the webpage has been rendered. For our example here, it suffices for the script to make a POST request to our API server with a few pieces of information such as

- the url to the page itself and

- the time the page was accessed.

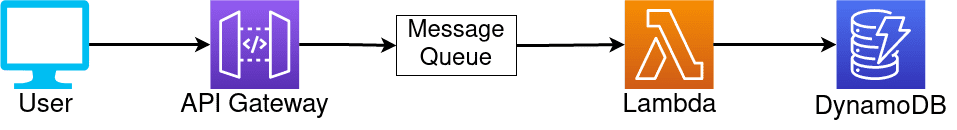

To make this more concrete, consider the following diagram, which uses AWS services but can be replaced with whatever analogous service the reader prefers.

Suppose a user viewed the page /posts/some-post at 2021-03-13 12:34:56 UTC. The steps are then roughly the following:

- The page has finished rendering and so it makes a POST request to our API Gateway.

  For example, we might have a JavaScript function

  ```javascript
  // Report a page view to the (placeholder) API Gateway endpoint.
  const send_metric = (page) => {
    const time = new Date().toISOString();
    // A scheme is required; without it, fetch treats the URL as a relative path.
    const query = `page=${encodeURIComponent(page)}&time=${encodeURIComponent(time)}`;
    fetch(`https://www.apigw.com/prod?${query}`, { method: "POST" });
  };
  ```

  and then have our webpage call that function once it has been loaded:

  ```html
  <body onload="send_metric('/posts/some-post')"> ... </body>
  ```

  Alternatively, to get the script to run upon the webpage being loaded, we can use libraries such as jQuery

  ```javascript
  $(document).ready(function () {
    send_metric("/posts/some-post");
  });
  ```

  or frontend frameworks such as React

  ```javascript
  // Inside a function component; the empty dependency list runs this once, after the first render.
  useEffect(() => {
    send_metric("/posts/some-post");
  }, []);
  ```

- Our API Gateway takes the request and passes it on to our lambda.

  Note that the message/event (a JSON document) sent to the lambda contains the query parameters (under `queryStringParameters` when using the Lambda proxy integration), which would look roughly like

  ```json
  { "page": "/posts/some-post", "time": "2021-03-13T12:34:56Z" }
  ```

- Our lambda then takes those two pieces of information and writes them to a DynamoDB table (let's say the partition key is the page path, `/posts/some-post`, and the sort key is the time, `2021-03-13T12:34:56Z`).

With that, you now have a database (DynamoDB) table where each row represents a page view and, from the row's data, you know which page was viewed and when.
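The lambda's side of these steps could be sketched roughly as follows. This is a minimal sketch rather than the exact handler behind this post: it assumes the Lambda proxy integration (so the query string arrives in `queryStringParameters`), and `toPageViewItem`, `makeHandler` and the injected `putItem` callback are hypothetical names. In a real deployment, `putItem` would wrap a DynamoDB put call.

```javascript
// Turn an API Gateway event into the row we want to store.
// Assumes a table whose partition key is `page` and whose sort key is `time`.
function toPageViewItem(event) {
  const params = (event && event.queryStringParameters) || {};
  const { page, time } = params;
  // Reject requests that are missing a field or carry an unparseable time.
  if (!page || !time || Number.isNaN(Date.parse(time))) {
    return null;
  }
  // Normalize the timestamp so that sort keys compare consistently as strings.
  return { page, time: new Date(time).toISOString() };
}

// `putItem` is injected (hypothetical) so the storage call can be swapped or faked.
function makeHandler(putItem) {
  return async function handler(event) {
    const item = toPageViewItem(event);
    if (item === null) {
      return { statusCode: 400, body: "missing or invalid page/time" };
    }
    await putItem(item);
    return { statusCode: 204, body: "" };
  };
}
```

Keeping the parsing in its own function means the validation can be checked without touching a database at all.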

Problems with the basic pipeline

A problem with the basic pipeline shown above is scalability. Since each page view triggers a call to the API Gateway, which in turn passes the message directly to the lambda, the pipeline can become very inefficient once traffic picks up.

The pipeline, as designed above, only allows the lambda to process 1 event/message per invocation. So, when there is a lot of traffic, the lambda will be making a lot of individual writes into the database. If an error occurs (perhaps too many writes were attempted in too short a time), that piece of data can be lost forever.

Modifying the pipeline

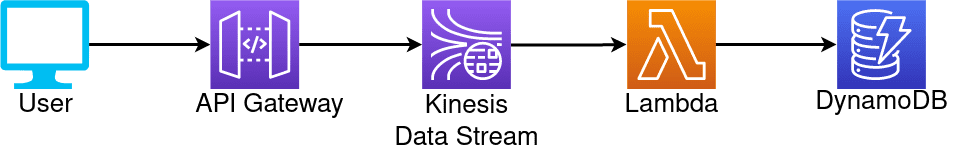

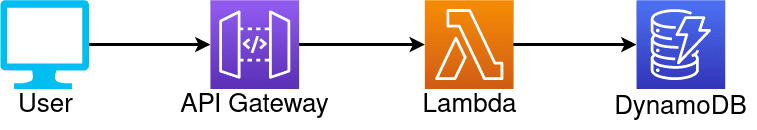

Our only choice left (I realize I didn't explicitly list this as an option, by the way) is to modify the pipeline. Luckily, it is a small modification: we just put a message queue or broker in front of our lambda. API Gateway is perfectly capable of putting messages into message queues for us.

This would prevent the lambda from having to process the messages sent from API Gateway immediately. Instead, the messages could line up, or queue up, in the message queue and the lambda could then reach out to the message queue for messages whenever it has capacity. Hence, message queues and brokers help prevent the lambda from making too many write requests too quickly (the cause of "write throughput errors").

Further, message queues and brokers have additional safety features that prevent messages from being lost or unintentionally deleted. I will avoid describing the mechanics of these features, in part because how they work depends on the queue or broker. However, the point is that if the lambda throws an error while processing a message, the message will still be available in the message queue and, so, no data is lost.

At this point, I should note that having a message queue or broker does not reduce the number of writes you'll have to make to your database, DynamoDB in our case. What it will do is help prevent lambda from having to make too many writes too quickly.

Using AWS services, we have two main options:

- Simple Queue Service (SQS) and

- Kinesis Data Stream (similar to Apache Kafka for those who are familiar with it).

There are advantages and disadvantages to each that I'll be explaining below.

Using SQS

Brief background on how SQS works with lambda: Lambda asks the SQS queue if it has messages available for it to process. If it does, the SQS queue provides a copy of those messages for lambda to process and marks those messages in the queue as unavailable. (This asking and receiving process is called "polling.") When the lambda is done processing the messages, it notifies the SQS queue that it has successfully processed them. The SQS queue then deletes the messages so that they are not re-processed. If the SQS queue does not receive the success confirmation in time, it makes those messages available for processing again, should a lambda ask for available messages in the future.

Despite how the diagram looks, SQS does not push messages to lambda to be processed. Instead, it is lambda that asks SQS for messages available to be processed, in the process known as "polling." Because lambda polls SQS for up to 10 messages at a time (the default batch size), the number of lambda executions can potentially be reduced by 90%! Of course, that is only the case if the SQS queue constantly has a backlog of messages to be processed.
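The receive, process and confirm cycle can be sketched from the lambda's side. This is a minimal sketch under a few assumptions: each SQS message body is a JSON string with `page` and `time` (this depends on how API Gateway is configured to forward requests), the event-source mapping has partial batch responses (`ReportBatchItemFailures`) enabled, and `makeSqsHandler` and `saveItem` are hypothetical names.

```javascript
// `saveItem` is an injected (hypothetical) async function that stores one page view.
function makeSqsHandler(saveItem) {
  return async function handler(event) {
    const batchItemFailures = [];
    for (const record of event.Records) {
      try {
        const { page, time } = JSON.parse(record.body);
        if (!page || !time) {
          throw new Error("missing page or time");
        }
        await saveItem({ page, time });
      } catch (err) {
        // Report only this message as failed. It becomes visible in the queue
        // again later, while the messages that succeeded are deleted.
        batchItemFailures.push({ itemIdentifier: record.messageId });
      }
    }
    return { batchItemFailures };
  };
}
```

One caveat with this design: a message that can never be parsed will keep coming back until it expires or is moved to a dead-letter queue, if one is configured.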

Another benefit of lambda being able to poll SQS for up to 10 messages at a time is that the lambda can then make a single batch write request to DynamoDB to add the content of all 10 messages.

Note the awkward language here (batch write request). It is 1 batch write request, but it will still consume 10 WRUs in DynamoDB (one per item, assuming each item is at most 1 KB). And, to be sure, this is still helpful: only 1 POST or PUT request is made instead of the 10 that would be needed if each write were made individually. That saves time which, in turn, translates to saved money.
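To make the "1 request, 10 writes" point concrete, here is a sketch of how a batch of SQS messages could be turned into batch write input. It assumes each message body is a JSON string with `page` and `time`, a hypothetical table name `page_views`, and the `RequestItems` shape that DynamoDB's BatchWriteItem takes when used through a document-style client (plain JavaScript values). `toBatchWriteInputs` is a made-up helper, not part of any SDK.

```javascript
const MAX_ITEMS_PER_BATCH_WRITE = 25; // DynamoDB's limit per BatchWriteItem call

function toBatchWriteInputs(event, tableName) {
  // BatchWriteItem rejects duplicate keys within one request, so keep one entry
  // per (page, time) pair; the table's key design would collapse them anyway.
  const unique = new Map();
  for (const record of event.Records) {
    const { page, time } = JSON.parse(record.body);
    unique.set(`${page}#${time}`, { page, time });
  }
  const requests = [...unique.values()].map((Item) => ({ PutRequest: { Item } }));

  // Split into chunks that respect the per-call limit.
  const inputs = [];
  for (let i = 0; i < requests.length; i += MAX_ITEMS_PER_BATCH_WRITE) {
    inputs.push({
      RequestItems: { [tableName]: requests.slice(i, i + MAX_ITEMS_PER_BATCH_WRITE) },
    });
  }
  return inputs;
}
```

With a batch of 10 messages this produces a single input object. A real handler would also need to retry anything DynamoDB hands back as `UnprocessedItems`.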

Using Kinesis Data Stream

Brief background on how Kinesis data stream works with lambda: With Kinesis Data Stream, lambdas can be either producers or consumers. A producer lambda is just one that writes, or puts, messages to the Kinesis data stream. A consumer lambda is, conversely, one that reads, or consumes, messages from the Kinesis data stream. Unlike SQS, Kinesis data stream does not delete messages after they have been consumed and processed. Rather, the consumer has something like a bookmark to keep track of its place in line (there are a lot of details I'm leaving out, but I think they might just confuse people). So, every time lambda polls Kinesis, it is (in the background) letting Kinesis data stream know its place in line. And every time lambda finishes processing the messages, it updates its position in line before asking Kinesis data stream for new messages. If an error occurs during processing, the lambda just asks for the same messages again, since messages are not deleted (although messages that are too old are automatically removed).

Much like SQS queues, Kinesis data stream will allow the lambda to process messages at its pace. However, there are differences to note.

The first is that Kinesis data stream does not delete messages once they are consumed. Instead, messages older than a preset TTL (time-to-live) get removed automatically. What this means for us is that there is an additional storage cost associated with using Kinesis data streams.

The second is that Kinesis data stream allows for far more messages to be polled at a time. Typically, I'd see people set up their Kinesis-lambda connection (event-source mapping) to have lambda poll up to 200 to 300 messages at a time.
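On the consumer side, the main practical difference from SQS is the shape of the event. In a Kinesis-triggered lambda, each record's payload arrives base64-encoded under `kinesis.data`. Below is a minimal sketch of decoding a batch, assuming the producer wrote a JSON document with `page` and `time` (an assumption about our own pipeline); `decodeKinesisRecords` is a hypothetical helper.

```javascript
// Decode every record in a Kinesis-triggered Lambda event into a page view.
function decodeKinesisRecords(event) {
  return event.Records.map((record) => {
    // Kinesis delivers the payload as a base64 string.
    const json = Buffer.from(record.kinesis.data, "base64").toString("utf8");
    const { page, time } = JSON.parse(json);
    // The sequence number marks the record's position in the stream.
    return { page, time, sequenceNumber: record.kinesis.sequenceNumber };
  });
}
```

The decoded items can then be written in batches, just as with SQS, only with more of them per invocation.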

Which to choose?

For our case at hand, I think I can provide a straightforward recommendation as to which to use. Simply, since SQS is the cheaper of the two, I'd recommend using SQS to start with until the traffic is so great that you see a backlog building up. When you do notice a backlog, switch to Kinesis data stream for the greater throughput.

Reading the data

Thus far, we have been focused on how to send and collect data. Here, we'll quickly go over how to read your data and what consequences that'll have for our architectural design.

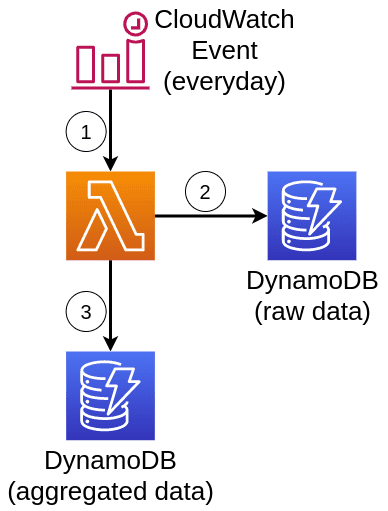

In our case, despite having painstakingly collected the data (or, at least, designed a pipeline to collect said data), the individual data (datum?) is not so important. Remember, the question we have set out to answer is "How many visitors does each of my webpages get a day?".

To do this, we need to do a count operation (even if an approximate one). And, so, the basic idea is to perform the count operation daily (for the past day's data) and store the result elsewhere.

Steps:

- A CloudWatch time-based event is triggered once a day (assuming what we care about are the daily metrics), executing the lambda,

- the lambda then queries the DynamoDB table hosting the raw data for all data from the past day, and

- after doing whatever aggregation it needs to do (just counting in our case, but it can be much more complicated if collecting more detailed data), writes the results to a different table.

Then, when your analyst (or whoever) wants to check out the daily page views, they can read from the aggregate table.
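The counting step itself is small. Here is a minimal sketch, assuming the raw rows have already been fetched as `{ page, time }` objects with ISO-8601 UTC timestamps; `countDailyViews` and the `{ page, day, views }` layout of the aggregate rows are hypothetical choices.

```javascript
// Count page views per page for one UTC day, e.g. day = "2021-03-13".
function countDailyViews(items, day) {
  const counts = {};
  for (const { page, time } of items) {
    // ISO-8601 UTC timestamps start with YYYY-MM-DD, so a prefix check suffices.
    if (typeof time === "string" && time.startsWith(day)) {
      counts[page] = (counts[page] || 0) + 1;
    }
  }
  // One row per page, ready to be written to the aggregate table.
  return Object.entries(counts).map(([page, views]) => ({ page, day, views }));
}
```

Because the day is part of each aggregate row, re-running the job for the same day overwrites the earlier result instead of double counting, provided the aggregate table is keyed on page and day.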

Choosing which database to use

In our post, we've been using DynamoDB, but there are other choices out there. One that I think is particularly appropriate for our use case (analytics) is AWS Timestream. Timestream, from what I can tell, is a specialized version of PostgreSQL, meant for time-series data.

Both DynamoDB and Timestream are serverless and highly available, meaning that AWS will handle the scaling and replication for you. The differentiating factors between the two are (from my point of view and for this use case) cost and schema flexibility.

Cost comparison

For a cost comparison, I have made a few assumptions, including

- that all reads and writes occur within a single region (so as to ignore data transfer fees),

- that all reads and writes use up 1 entire read request unit and 1 entire write request unit, respectively,

- that the read load and write load are not particularly predictable (since there are no provisioned read/write units for Timestream),

- and that queries for Timestream are roughly comparable to reads for DynamoDB.

For the us-west-2 (Oregon) region, I find the following (prices as of the time of writing):

Storage:

- DynamoDB: $0.25 per GB-Month after the first complimentary 25 GB-Month

- Timestream: $0.03 per GB-Month if using magnetic storage

Reads / Queries:

- DynamoDB: $0.25 per GB

- Timestream: $0.01 per GB scanned

Writes:

- DynamoDB: $1.25 per GB

- Timestream: $0.01 per GB

So, overall, I find Timestream to be much cheaper than DynamoDB.
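To see how the gap plays out, here is a small worked example. It simply plugs the per-GB figures listed above into a monthly total; the figures are copied as-is from this post rather than from a current price list, and the workload numbers and the `monthlyCost` helper are made up for illustration.

```javascript
// Per-GB figures copied from the lists above (us-west-2); not authoritative pricing.
const PRICES = {
  dynamodb: { storage: 0.25, freeStorageGb: 25, read: 0.25, write: 1.25 },
  timestream: { storage: 0.03, freeStorageGb: 0, read: 0.01, write: 0.01 },
};

// Monthly cost in USD for a given amount of stored, read and written data.
function monthlyCost(service, { storedGb, readGb, writtenGb }) {
  const p = PRICES[service];
  const billableStorage = Math.max(0, storedGb - p.freeStorageGb);
  return billableStorage * p.storage + readGb * p.read + writtenGb * p.write;
}

// Hypothetical workload: 100 GB stored, 50 GB read, 10 GB written per month.
const workload = { storedGb: 100, readGb: 50, writtenGb: 10 };
console.log(monthlyCost("dynamodb", workload).toFixed(2)); // 43.75
console.log(monthlyCost("timestream", workload).toFixed(2)); // 3.60
```

For DynamoDB that is 75 billable GB of storage (18.75) plus 12.50 each for reads and writes; for Timestream it is 3.00 + 0.50 + 0.10.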

Schema flexibility comparison

As a specialized version of PostgreSQL, Timestream is still largely a traditional relational database management system (RDBMS), by which I mean that the schema is rigid: the columns must be known and defined beforehand. On the plus side, there is much more support for time-series data (DynamoDB has no such support).

DynamoDB, on the other hand, is a NoSQL database. Specifically, it is a key-value store where the value has few constraints. In essence, you can set the value to be a JSON-object of your choosing.

In short, schema-wise, the debate between Timestream and DynamoDB is largely the same as the debate between relational databases and NoSQL databases. Writing data to a relational database table is hard (comparatively), but reading or querying is easy, as relational databases provide a lot of support. Writing data to a NoSQL database tends to be easy, as many are flexible with respect to the table's schema; however, reading and querying become difficult, as much of the logic for more complicated queries has to be off-loaded to the application rather than handled by the database itself.

So, which should you choose?

Given that our conclusion from the previous section is that relational databases are hard to write to but easy to read from, and NoSQL databases are easy to write to but hard to read from, I would recommend starting with a NoSQL database and then transitioning to a relational database once your schema has become stable. This will provide you with the flexibility you need as you iterate upon your MVP, and with lower operational costs later on.

Conclusion

In this post, we have gone over a simple analytics pipeline together. There are a number of variations and additions I wanted to cover, including

- tracking how often resources (not HTML pages) have been downloaded,

- A/B testing for webpages,

- A/B testing for email campaigns,

- A/B testing entire websites via CloudFront functions, and

- archiving data for future analysis purposes.

However, I feel this post is long enough as is.

Lastly, I just want to share some slightly tangential thoughts of mine on this topic. I think you'll agree with me that, as this post has shown, it is not difficult to collect information on unwitting visitors to your website. (To note: although I did not record the user's IP address in our example, that is a fairly easy task.) This just points to how easily our movements on the internet can be tracked. It is particularly scary since the data tracked and collected by one website is often shared among (or just sold to) advertisers, and since data scrubbed of identifiable content can sometimes still be traced back to an individual. Anyway, just some scary food for thought.